
Source: www.kaggle.com/learn/intro-to-machine-learning


Step 0: Setup

# Code you have previously used to load data

import pandas as pd

from sklearn.tree import DecisionTreeRegressor

# Path of the file to read

iowa_file_path = '../input/home-data-for-ml-course/train.csv'

home_data = pd.read_csv(iowa_file_path)

y = home_data.SalePrice

feature_columns = ['LotArea', 'YearBuilt', '1stFlrSF', '2ndFlrSF', 'FullBath', 'BedroomAbvGr', 'TotRmsAbvGrd']

X = home_data[feature_columns]

# Specify Model

iowa_model = DecisionTreeRegressor()

# Fit Model

iowa_model.fit(X, y)

print("First in-sample predictions:", iowa_model.predict(X.head()))

print("Actual target values for those homes:", y.head().tolist())

# Set up code checking

from learntools.core import binder

binder.bind(globals())

from learntools.machine_learning.ex4 import *

print("Setup Complete")

Step 1: Split Your Data

from sklearn.model_selection import train_test_split

train_X, val_X, train_y, val_y = train_test_split(X, y, random_state=1)

sklearn.model_selection.train_test_split(*arrays, test_size=None, train_size=None, random_state=None, shuffle=True, stratify=None)

random_state : int, RandomState instance or None, default=None

Controls the shuffling applied to the data before applying the split. Pass an int for reproducible output across multiple function calls. See Glossary.

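The train_test_split function splits each input into two subsets, train and test, shuffling the data according to the random_state option.

It accepts one input or several. Each input can be a list, a NumPy array, a SciPy sparse matrix, or a pandas DataFrame.

The example code passes X and y, so the return values come back in the order X_train, X_test, y_train, y_test (named train_X, val_X, train_y, val_y in the code above).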
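To see the splitting behavior on something small, here is a minimal sketch using toy arrays. The names X_demo and y_demo are made up for illustration and are not part of the exercise. It checks the default 75/25 split, that rows and targets stay paired, and that a fixed random_state reproduces the same split.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data (hypothetical): 8 samples with 2 features each, plus one target per sample.
X_demo = np.arange(16).reshape(8, 2)
y_demo = np.arange(8)

# Every array passed in comes back as a (train, test) pair, in the same order.
X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, random_state=1)

# With test_size and train_size both left as None, 25% of the rows go to the test set.
print(X_tr.shape, X_te.shape)  # (6, 2) (2, 2)

# Rows stay aligned: in this toy data each target equals its row's first feature // 2.
print(np.array_equal(X_tr[:, 0] // 2, y_tr))  # True

# The same random_state reproduces the same split on a second call.
X_tr2, X_te2, y_tr2, y_te2 = train_test_split(X_demo, y_demo, random_state=1)
print(np.array_equal(X_te, X_te2))  # True
```

Passing an int for random_state is what makes the exercise results repeatable from run to run.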

Step 2: Specify and Fit the Model

# The required libraries were already imported in Step 0.

# Specify the model

iowa_model = DecisionTreeRegressor(random_state=1)

# Fit iowa_model with the training data.

iowa_model.fit(train_X, train_y)

Step 3: Make Predictions with Validation data

val_predictions = iowa_model.predict(val_X)

predict(X, check_input=True)

Predict class or regression value for X.

For a classification model, the predicted class for each sample in X is returned. For a regression model, the predicted value based on X is returned.

def predict(self, X, check_input=True):

"""Predict class or regression value for X.

For a classification model, the predicted class for each sample in X is

returned. For a regression model, the predicted value based on X is

returned.

Parameters

----------

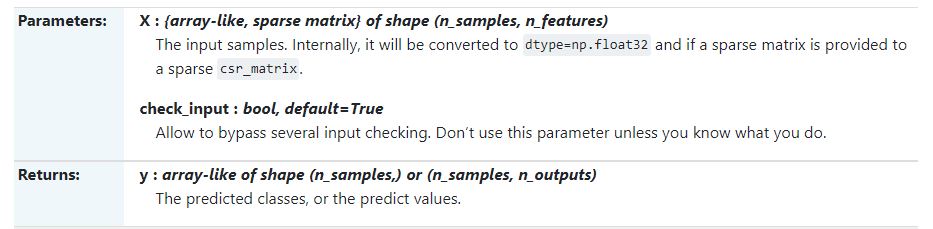

X : {array-like, sparse matrix} of shape (n_samples, n_features)

The input samples. Internally, it will be converted to

``dtype=np.float32`` and if a sparse matrix is provided

to a sparse ``csr_matrix``.

check_input : bool, default=True

Allow to bypass several input checking.

Don't use this parameter unless you know what you do.

Returns

-------

y : array-like of shape (n_samples,) or (n_samples, n_outputs)

The predicted classes, or the predict values.

"""

check_is_fitted(self)

X = self._validate_X_predict(X, check_input)

proba = self.tree_.predict(X)

n_samples = X.shape[0]

# Classification

if is_classifier(self):

if self.n_outputs_ == 1:

return self.classes_.take(np.argmax(proba, axis=1), axis=0)

else:

class_type = self.classes_[0].dtype

predictions = np.zeros((n_samples, self.n_outputs_),

dtype=class_type)

for k in range(self.n_outputs_):

predictions[:, k] = self.classes_[k].take(

np.argmax(proba[:, k], axis=1),

axis=0)

return predictions

# Regression

else:

if self.n_outputs_ == 1:

return proba[:, 0]

else:

return proba[:, :, 0]
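The two branches of predict can be observed from the outside with a small sketch. The toy data below is made up for illustration. A classifier returns one of the labels it was fitted on, while a regressor returns a numeric value per sample.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Toy data (hypothetical): one feature, four samples.
X_toy = np.array([[0.0], [1.0], [2.0], [3.0]])

# Classifier: predict returns one of the class labels seen during fit.
clf = DecisionTreeClassifier(random_state=1).fit(X_toy, ["low", "low", "high", "high"])
clf_pred = clf.predict([[0.2], [2.8]])
print(clf_pred)  # ['low' 'high']

# Regressor: predict returns a numeric value, the mean target of the matching leaf.
reg = DecisionTreeRegressor(random_state=1).fit(X_toy, [10.0, 20.0, 30.0, 40.0])
reg_pred = reg.predict([[0.2], [2.8]])
print(reg_pred)        # [10. 40.]
print(reg_pred.shape)  # (2,)
```

With a single target column, the regressor output is a 1-D array with one entry per input row, which is the shape val_predictions has in this exercise.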

# Print only the first 5 validation predictions

print(val_predictions[:5])

# Print only the first 5 actual prices from the validation data

print(val_y.head())

To select n items, val_predictions[:n] prints only the first n.
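As a rough illustration of the two ways of taking the first n items, the sketch below uses made-up stand-ins for val_predictions (a NumPy array) and val_y (a pandas Series). The names preds and actual and all the numbers are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-ins: predict() returns a NumPy array, the target is a pandas Series.
preds = np.array([10.0, 20.0, 30.0, 40.0, 50.0, 60.0])
actual = pd.Series([11, 19, 33, 38, 52, 61], index=[7, 3, 9, 1, 4, 0], name="SalePrice")

n = 3
print(preds[:n])       # slicing works on the array
print(actual.head(n))  # head() defaults to n=5 when called without an argument

# For a Series, head(n) selects the same rows as positional slicing with iloc.
print(actual.head(n).equals(actual.iloc[:n]))  # True

# Side-by-side view: .values drops the shuffled index so rows pair up by position.
comparison = pd.DataFrame({"predicted": preds[:n], "actual": actual.head(n).values})
print(comparison)
```

After train_test_split the Series index is shuffled, so pairing by position rather than by index label is the safer choice when comparing the two.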

Step 4: Calculate the Mean Absolute Error in Validation Data

from sklearn.metrics import mean_absolute_error

val_mae = mean_absolute_error(val_y, val_predictions)

# Print the validation MAE

print(val_mae)
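For reference, the mean absolute error is the average of |actual - predicted|. The sketch below, which uses made-up numbers and synthetic data rather than the Iowa housing file, computes it by hand and then compares in-sample error with validation error for a fully grown tree.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# MAE by hand on toy numbers: the mean of the absolute differences.
y_true = np.array([100.0, 200.0, 300.0])
y_pred = np.array([110.0, 190.0, 330.0])
manual_mae = np.mean(np.abs(y_true - y_pred))  # (10 + 10 + 30) / 3
print(manual_mae)
print(np.isclose(manual_mae, mean_absolute_error(y_true, y_pred)))  # True

# The absolute value makes the metric symmetric in its two arguments.
print(np.isclose(mean_absolute_error(y_true, y_pred),
                 mean_absolute_error(y_pred, y_true)))  # True

# Synthetic data (hypothetical): a noisy linear target on one random feature.
rng = np.random.default_rng(0)
X_syn = rng.uniform(0, 10, size=(200, 1))
y_syn = 3.0 * X_syn[:, 0] + rng.normal(0, 2.0, size=200)

tr_X, va_X, tr_y, va_y = train_test_split(X_syn, y_syn, random_state=1)
model = DecisionTreeRegressor(random_state=1).fit(tr_X, tr_y)

# A fully grown tree nearly memorizes its training rows, so the in-sample error
# is far smaller than the error on rows it has never seen.
in_sample_mae = mean_absolute_error(tr_y, model.predict(tr_X))
validation_mae = mean_absolute_error(va_y, model.predict(va_X))
print(in_sample_mae < validation_mae)  # True
```

This gap is the reason the exercise scores the model on val_X rather than on the rows used for fitting.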

# Check your answer

step_4.check()